In medical technology, the development and use of large language models (LLMs) is becoming increasingly important. These models can digest and interpret large volumes of medical text, offering insights that traditionally require extensive human expertise. As the technology matures, it has the potential to significantly lower healthcare costs and broaden access to medical knowledge across different populations.

A growing challenge in this field is the lack of open-source models that can match the performance of proprietary systems. Open-source healthcare LLMs matter because they promote transparency and make innovation more widely accessible, both of which are essential for equitable progress in healthcare technology.

Healthcare LLMs have traditionally been improved through continued pre-training on large domain-specific datasets, followed by fine-tuning for particular tasks. However, these techniques often scale poorly as model size and data complexity grow, which limits their practical use in real-world medical settings.

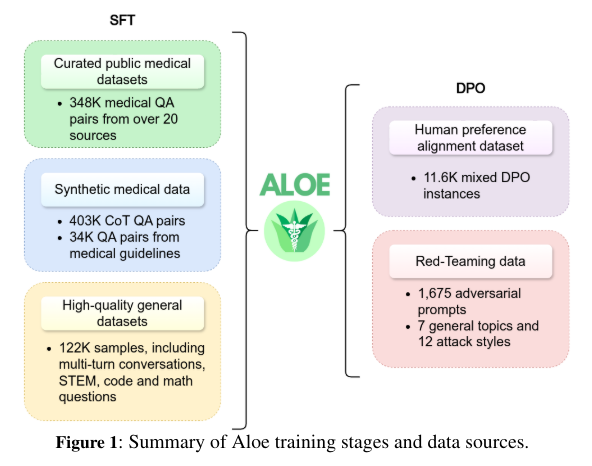

Researchers from the Barcelona Supercomputing Center (BSC) and Universitat Politècnica de Catalunya – Barcelona Tech (UPC) have developed Aloe, a new family of healthcare LLMs. The Aloe models combine model merging and instruction tuning: they build on the strengths of existing models and refine them through training on a mix of public and proprietary synthetic datasets. The synthetic portion of this data is generated with Chain of Thought (CoT) prompting, which elicits step-by-step reasoning for medical questions.
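The article does not say which merging method Aloe uses. As a rough illustration of the general idea, the simplest form of model merging is a weighted average of the parameters of several models that share the same architecture. The sketch below assumes each model is a plain dictionary mapping parameter names to flat lists of floats (a hypothetical stand-in for real weight tensors); real merges operate on framework tensors and often use more elaborate schemes than linear averaging.

```python
from typing import Dict, List

# Hypothetical stand-in for a model checkpoint: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def linear_merge(models: List[StateDict], weights: List[float]) -> StateDict:
    """Merge models by taking a normalized weighted average of each parameter."""
    if not models or len(models) != len(weights):
        raise ValueError("need at least one model and exactly one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    # Normalize so the merged parameters stay on the same scale as the inputs.
    norm = [w / total for w in weights]

    names = models[0].keys()
    for m in models[1:]:
        if m.keys() != names:
            raise ValueError("all models must share the same parameter names")

    merged: StateDict = {}
    for name in names:
        length = len(models[0][name])
        if any(len(m[name]) != length for m in models):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise weighted average across all models.
        merged[name] = [
            sum(w * m[name][i] for w, m in zip(norm, models))
            for i in range(length)
        ]
    return merged
```

For example, merging `{"w": [1.0, 2.0]}` and `{"w": [3.0, 6.0]}` with equal weights yields `{"w": [2.0, 4.0]}`. Unequal weights let one parent model contribute more to the result.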

The Aloe training pipeline combines several data-processing and training stages. It includes an alignment phase based on Direct Preference Optimization (DPO), which steers the models toward ethically preferred responses, and the resulting models are evaluated against a range of bias and toxicity metrics. The models also undergo red teaming to surface potential risks and support safe deployment.
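DPO trains a model directly on preference pairs (a preferred and a rejected response to the same prompt) without fitting a separate reward model. The minimal sketch below computes the standard per-pair DPO loss from summed log-probabilities under the trainable policy and a frozen reference model. The function names, the default `beta=0.1`, and the assumption that log-probabilities are already computed are illustrative choices, not details of the Aloe training setup.

```python
import math
from typing import Sequence, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO loss for a single preference pair.

    Each argument is the summed log-probability of a full response under
    either the policy being trained or the frozen reference model:
        loss = -log(sigmoid(beta * (chosen_log_ratio - rejected_log_ratio)))
    """
    chosen_log_ratio = policy_chosen - ref_chosen
    rejected_log_ratio = policy_rejected - ref_rejected
    margin = beta * (chosen_log_ratio - rejected_log_ratio)
    # -log(sigmoid(m)) == log(1 + exp(-m)) == softplus(-m)
    return softplus(-margin)


def mean_dpo_loss(pairs: Sequence[Tuple[float, float, float, float]],
                  beta: float = 0.1) -> float:
    """Average DPO loss over a batch of (policy_chosen, policy_rejected,
    ref_chosen, ref_rejected) tuples."""
    if not pairs:
        raise ValueError("need at least one preference pair")
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)
```

When the policy and the reference assign identical log-probabilities, the margin is zero and the loss is log 2 (about 0.693). Raising the policy's likelihood of the preferred response relative to the reference pushes the loss below that value, which is the signal that drives alignment.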

The Aloe models achieve state-of-the-art results among open models, outperforming them in medical question-answering accuracy and ethical alignment. On medical benchmarks such as MedQA and PubMedQA, for example, the Aloe models reported accuracy improvements of over 7% compared to prior open models, indicating a stronger ability to handle complex medical questions.
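Benchmarks such as MedQA and PubMedQA are typically scored as accuracy over multiple-choice or short-label answers. A figure like "over 7%" can describe either a gain in percentage points or a relative gain, and the two can differ noticeably. The helpers below are a hypothetical sketch (not the Aloe evaluation harness) that scores answers and reports both quantities.

```python
from typing import Sequence, Tuple


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Fraction of answers (e.g. 'A'-'E' or 'yes'/'no'/'maybe') matching the gold labels."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("predictions and gold labels must be non-empty and aligned")
    # Case- and whitespace-insensitive exact match.
    correct = sum(p.strip().upper() == g.strip().upper()
                  for p, g in zip(predictions, gold))
    return correct / len(gold)


def improvement(new_acc: float, old_acc: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    if old_acc <= 0:
        raise ValueError("baseline accuracy must be positive")
    points = (new_acc - old_acc) * 100
    relative = (new_acc - old_acc) / old_acc * 100
    return points, relative
```

With hypothetical numbers, moving from 70% to 75% accuracy is a 5-point absolute gain but roughly a 7.1% relative gain, so it helps to state which measure a reported improvement uses.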

In conclusion, the Aloe models mark a significant advance in applying LLMs to healthcare. By combining state-of-the-art training techniques with ethical safeguards, they improve the accuracy and reliability of medical text processing and help make advances in healthcare technology accessible and beneficial to a broad population. Models like these are an important step toward democratizing medical knowledge and improving healthcare worldwide through decision-support tools that are both effective and ethically aligned.

Check out the Paper and Model. All credit for this research goes to the researchers of this project.