AI research has increasingly focused on simulating human-like interaction. The latest systems aim to handle text, audio, and visual data within a single framework rather than in separate pipelines. This effort addresses a limitation of earlier models, which processed each input type separately and often produced delayed responses and disjointed conversations.

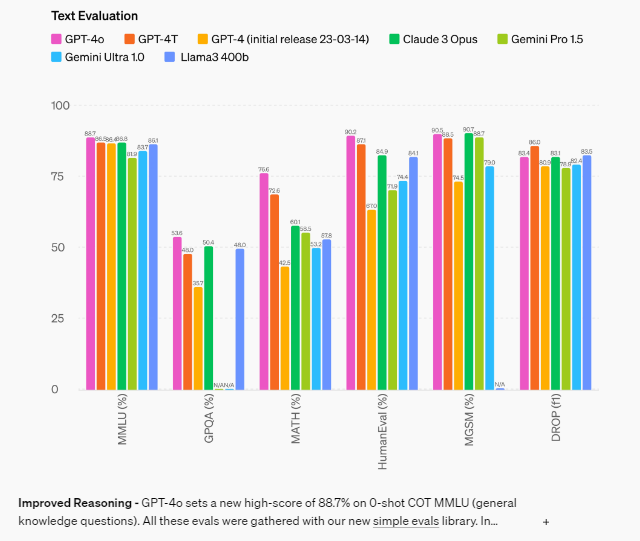

Traditional AI architectures typically compartmentalize the handling of different data types, using distinct subsystems for text, audio, and visuals. This approach slows the system’s ability to react in real time and makes it harder to produce coherent responses across communication formats. For instance, voice interactions built on prior models such as GPT-3.5 and GPT-4 had average latencies of 2.8 and 5.4 seconds, respectively, well short of fluid, human-like exchanges.

OpenAI’s research team has developed GPT-4o, a model that combines text, audio, and visual processing in a unified framework. The ‘o’ stands for ‘omni’, reflecting this all-encompassing design. GPT-4o reduces the latency of audio responses to an average of 320 milliseconds, which is close to human response times in conversation. The integration allows the model to interpret and generate information across multiple formats, making it suited to interactive scenarios that were difficult for segmented models.
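To put the latency figures in perspective, the short sketch below computes how many times lower GPT-4o's average latency is than the earlier voice figures. It uses only the numbers quoted in this article; the dictionary and the `speedup` helper are illustrative names, not part of any OpenAI tooling.

```python
# Average voice-interaction latencies quoted in the article, in seconds.
LATENCY_S = {"GPT-3.5": 2.8, "GPT-4": 5.4, "GPT-4o": 0.320}


def speedup(baseline: str, candidate: str = "GPT-4o") -> float:
    """Return how many times lower the candidate's latency is than the baseline's."""
    return LATENCY_S[baseline] / LATENCY_S[candidate]


if __name__ == "__main__":
    for name in ("GPT-3.5", "GPT-4"):
        print(f"{name} -> GPT-4o: about {speedup(name):.1f}x lower average latency")
```

By this arithmetic, the average latency is roughly 9 times lower than the GPT-3.5 figure and roughly 17 times lower than the GPT-4 figure.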

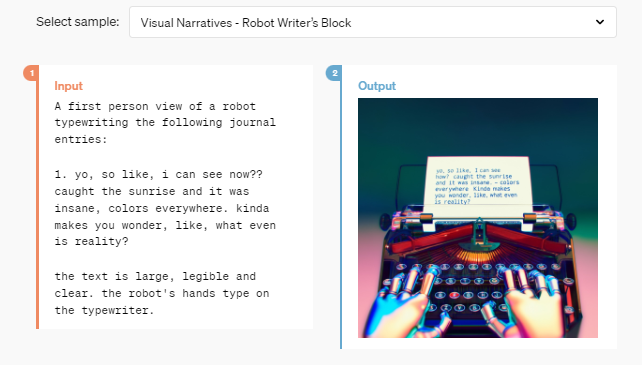

Two capabilities illustrate how this integration improves user interaction:

- It allows users to take a photo of a text in a foreign language and receive instant translation and contextual information about the text.

- It supports more natural voice interactions and is expected to add real-time video conversations, so that users could, for example, receive live explanations of sports rules during a game.
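For developers, the photo-translation scenario could be approximated with an image-plus-text request. The sketch below only builds the request payload in the shape used by OpenAI's Chat Completions API for image inputs. The helper name `build_translation_request`, the prompt wording, and the assumption that the photo is a JPEG are all illustrative; nothing here reflects how the ChatGPT app implements the feature.

```python
import base64


def build_translation_request(image_bytes: bytes, target_language: str = "English") -> dict:
    """Build keyword arguments for a chat completion that translates text in a photo.

    Hypothetical helper: it assumes the image is a JPEG and embeds it as a
    base64 data URL next to a plain-text instruction.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    prompt = (
        f"Translate the text in this photo into {target_language} "
        "and briefly explain its context."
    )
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                    },
                ],
            }
        ],
    }
```

With the official `openai` Python package installed and an API key configured, the returned dictionary could be passed as `OpenAI().chat.completions.create(**request)`. That call is not exercised here, since it needs network access and credentials.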

GPT-4o’s design is rooted in a single neural network that processes all inputs and outputs, irrespective of modality. This end-to-end approach improves processing speed and cost efficiency: the model is 50% cheaper in the API than GPT-4 Turbo. GPT-4o also handles non-English and multilingual text better than its predecessors, using up to 4.4 times fewer tokens for languages such as Gujarati, which broadens its accessibility and range of applications.
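The two efficiency figures compound for text in a language like Gujarati. As a rough sketch, assume that cost is proportional to token count and that both figures are measured against the same baseline; the article states neither, so treat the result as an illustration rather than a pricing claim. The function name is hypothetical.

```python
def relative_cost(token_reduction: float, price_discount: float) -> float:
    """Return request cost relative to the baseline (1.0 means unchanged).

    token_reduction: factor by which token count shrinks (4.4 = 4.4x fewer tokens).
    price_discount: fractional per-token price cut (0.5 = 50% cheaper).
    Assumes cost scales linearly with the number of tokens.
    """
    if token_reduction <= 0:
        raise ValueError("token_reduction must be positive")
    if not 0 <= price_discount < 1:
        raise ValueError("price_discount must be in [0, 1)")
    return (1.0 / token_reduction) * (1.0 - price_discount)


if __name__ == "__main__":
    ratio = relative_cost(token_reduction=4.4, price_discount=0.5)
    print(f"Relative cost under these assumptions: {ratio:.1%} of baseline")
```

Under these assumptions, the same Gujarati text would cost a little over a tenth of the baseline, since 1/4.4 × 0.5 ≈ 0.11.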

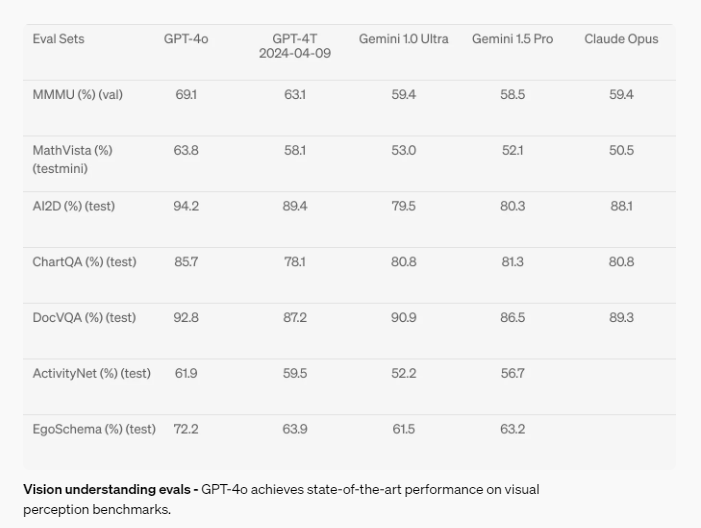

Performance evaluations of GPT-4o show substantial advances over earlier models. GPT-4o supports more than 50 languages, widening its accessibility and utility across regions. The model matches GPT-4 Turbo on English text and coding tasks while setting new benchmarks in multilingual, audio, and visual capabilities. In practical terms, GPT-4o can respond to audio inputs in as little as 232 milliseconds, fast enough to sustain an exchange at a pace comparable to human conversation.

The latest release also brings new features to ChatGPT free users. Key additions include:

- Access to GPT-4 level intelligence for enhanced response quality.

- The capability to receive answers from both the AI model and the internet for a comprehensive understanding.

- Features to analyze data, create charts, and engage in detailed discussions about uploaded images.

- Options to summarize documents, assist in drafting content, and analyze uploaded files, enriching the user’s interaction with digital content.

- Access to GPTs and the GPT Store for tailored AI functionality.

The rollout of these features to users without subscription fees underscores a commitment to democratizing advanced technology. GPT-4o has already been made available to ChatGPT Plus and Team users, and plans are underway to extend these capabilities to ChatGPT Free users subject to manageable usage limits.

In conclusion, the introduction of GPT-4o and its deployment to free users marks a pivotal moment in AI accessibility. It reflects the dual goals of advancing AI technology and making it widely accessible, thereby narrowing the digital divide. This strategy improves the user experience by offering sophisticated, multilingual, and multi-functional AI tools, and it helps these technologies benefit a global audience, promoting a more inclusive future for digital interaction.